Abstract

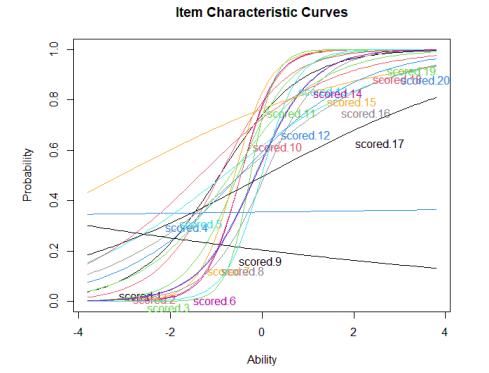

This study used Item Response Theory (IRT), implemented in the R program, to comprehensively analyze the quality of the Mathematics Even Semester Final Assessment test for class VIII in the 2021/2022 academic year. The assessment was created collaboratively by mathematics teachers at a public junior high school in Binjai. It consists of 20 multiple-choice questions, each with four answer options. The study was descriptive and followed a quantitative methodology. The data source was the responses of 189 class VIII students who took the Mathematics Even Semester Final Assessment in the 2021/2022 academic year, collected using documentation methods. The results showed that: (1) the items developed by the teachers were most appropriately analyzed with a two-parameter logistic (2-PL) model; (2) the material covered during the even semester was unevenly distributed across the tested items; (3) eight of the 20 items were acceptable and kept in the question bank, while the remaining 12 were of poor quality; (4) the items fell into the easy-to-moderate difficulty range, with moderate items predominating; and (5) the Mathematics Even Semester Final Assessment in class VIII provided accurate information about students’ mathematics ability at moderate ability levels ( to ).

Copyright (c) 2023 Nadilla Rahmadani, Kana Hidayati

This work is licensed under a Creative Commons Attribution 4.0 International License.

